A Generative AI Engineer is developing a patient-facing, healthcare-focused chatbot. If the patient’s question is not a medical emergency, the chatbot should solicit more information from the patient to pass to the doctor’s office and suggest a few relevant pre-approved medical articles for reading. If the patient’s question is urgent, the chatbot should direct the patient to call their local emergency services.

Given the following user input:

“I have been experiencing severe headaches and dizziness for the past two days.”

Which response is most appropriate for the chatbot to generate?

A Generative AI Engineer is developing an LLM application that users can use to generate personalized birthday poems based on their names.

Which technique would be most effective in safeguarding the application, given the potential for malicious user inputs?

A Generative AI Engineer is building an LLM-based application that has an important transcription (speech-to-text) task. Speed is essential for the success of the application.

Which open Generative AI models should be used?

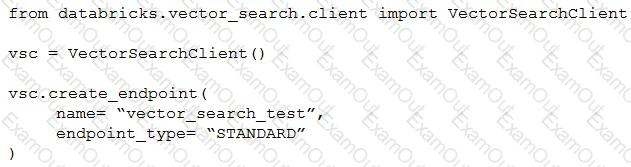

A Generative AI Engineer is using the code below to test setting up a vector store:

Assuming they intend to use Databricks managed embeddings with the default embedding model, what should be the next logical function call?

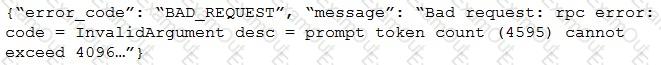

After changing the response-generating LLM in a RAG pipeline from GPT-4 to a self-hosted model with a shorter context length, the Generative AI Engineer is getting the following error:

Which TWO solutions should the Generative AI Engineer implement without changing the response-generating model? (Choose two.)
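
For context, and assuming the error is a context-length overflow (the error text itself is not reproduced here), one general way to stay within a shorter context window is to limit how much retrieved text reaches the prompt. The sketch below is illustrative only: `fit_chunks_to_budget`, its parameters, and the whitespace-based token count are hypothetical stand-ins, not part of any particular library.

```python
def fit_chunks_to_budget(chunks, max_context_tokens, reserved_tokens, count_tokens=None):
    """Keep the top-ranked chunks whose combined size fits the token budget.

    chunks: retrieved texts, assumed to be ordered from most to least relevant.
    max_context_tokens: context length of the response-generating model.
    reserved_tokens: tokens set aside for the question, instructions, and answer.
    """
    if count_tokens is None:
        # Crude whitespace count; an assumption standing in for the real tokenizer.
        count_tokens = lambda text: len(text.split())

    budget = max_context_tokens - reserved_tokens
    kept, used = [], 0
    for chunk in chunks:
        cost = count_tokens(chunk)
        if used + cost > budget:
            break  # stop at the first chunk that would overflow the budget
        kept.append(chunk)
        used += cost
    return kept


retrieved = ["a b c", "d e", "f g h i"]
selected = fit_chunks_to_budget(retrieved, max_context_tokens=10, reserved_tokens=4)
```

With a budget of 6 tokens, only the first two chunks are kept. Smaller chunks at indexing time make this kind of budget easier to fill without dropping relevant text.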

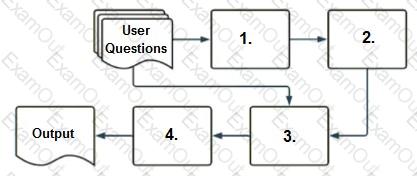

A company has a typical RAG-enabled, customer-facing chatbot on its website.

Select the correct sequence of components a user's question will go through before the final output is returned. Use the diagram above for reference.
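
As a rough illustration of how a question typically moves through a RAG chatbot, the toy sketch below chains four stages: embedding the question, searching a vector index, building a context-augmented prompt, and calling the response-generating LLM. Every function here is a hypothetical stand-in (a bag-of-words "embedding", an in-memory index, a stubbed LLM), not a real model or vector database.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words embedding; stands in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def vector_search(query_vec, index, k=1):
    """Return the k documents whose vectors are most similar to the query."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(question, context_docs):
    """Combine retrieved context and the question into a single prompt."""
    context = "\n".join(context_docs)
    return f"Context:\n{context}\n\nQuestion: {question}"


def generate(prompt):
    """Stub for the response-generating LLM."""
    return f"[LLM answer based on]\n{prompt}"


def answer(question, index):
    query_vec = embed(question)              # 1. embed the question
    docs = vector_search(query_vec, index)   # 2. retrieve similar documents
    prompt = build_prompt(question, docs)    # 3. augment the prompt with context
    return generate(prompt)                  # 4. generate the response


documents = ["Returns are accepted within 30 days.", "Shipping takes 5 business days."]
index = [(doc, embed(doc)) for doc in documents]
reply = answer("how long does shipping take", index)
```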

What is an effective method to preprocess prompts using custom code before sending them to an LLM?
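
To make the idea of custom prompt preprocessing concrete, here is a minimal sketch of a wrapper that cleans a prompt before forwarding it to a model. `PreprocessingLLM`, `echo_llm`, and the cleanup rules are hypothetical; in an MLflow-based setup, similar logic is often placed in the `predict` method of a custom `mlflow.pyfunc.PythonModel`, which this sketch deliberately avoids importing so that it runs on its own.

```python
import re


class PreprocessingLLM:
    """Wraps an LLM call so every prompt is cleaned before it is sent."""

    def __init__(self, llm_call, max_chars=2000):
        self.llm_call = llm_call    # any callable that takes a prompt string
        self.max_chars = max_chars  # hypothetical length cap for illustration

    def preprocess(self, prompt):
        # Collapse runs of whitespace, trim the ends, and cap the length.
        cleaned = re.sub(r"\s+", " ", prompt).strip()
        return cleaned[: self.max_chars]

    def predict(self, prompt):
        return self.llm_call(self.preprocess(prompt))


def echo_llm(prompt):
    """Stub model used only to show what the wrapper forwards."""
    return f"echo: {prompt}"


model = PreprocessingLLM(echo_llm)
result = model.predict("  Hello \n\n world  ")
```

Keeping the preprocessing inside the model wrapper means the same cleanup runs wherever the model is served, rather than depending on each caller to apply it.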

A Generative AI Engineer is working with a retail company that wants to enhance its customer experience by automatically handling common customer inquiries. They are working on an LLM-powered AI solution that should improve response times while maintaining a personalized interaction. They want to define the appropriate input and LLM task to do this.

Which input/output pair will do this?