A data scientist at an e-commerce company is working with user data obtained from its subscriber database and has stored the data in a DataFrame df_user. Before processing the data further, the data scientist wants to create another DataFrame, df_user_non_pii, that contains only the non-PII columns. The PII columns in df_user are first_name, last_name, email, and birthdate.

Which code snippet can be used to meet this requirement?
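For reference, one way to express this requirement is DataFrame.drop, which returns a new DataFrame and leaves the original untouched. The sketch below is a minimal illustration; the helper name drop_pii and the constant PII_COLUMNS are hypothetical, and the column names are taken from the question text.

```python
# Column names listed as PII in the question.
PII_COLUMNS = ["first_name", "last_name", "email", "birthdate"]


def drop_pii(df_user):
    """Return a new DataFrame without the PII columns.

    DataFrame.drop accepts several column names at once and returns a
    new DataFrame, so df_user itself is not modified.
    """
    return df_user.drop(*PII_COLUMNS)
```

With a real DataFrame this would be used as df_user_non_pii = drop_pii(df_user).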

A data engineer is reviewing a Spark application that applies several transformations to a DataFrame and notices that the job does not start executing immediately.

Which two characteristics of Apache Spark's execution model explain this behavior?

Choose 2 answers:
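The behavior in this question can be seen in a short sketch: transformations such as filter and select only extend the logical plan, and Spark submits a job when an action such as count is called. The helper names and the columns amount and customer_id are hypothetical and used only for illustration.

```python
def build_pipeline(df):
    """Chain transformations only; no Spark job is submitted here."""
    # "amount" and "customer_id" are hypothetical column names.
    positive = df.filter("amount > 0")
    return positive.select("customer_id", "amount")


def run_pipeline(df):
    """Build the plan, then trigger execution with an action."""
    pipeline = build_pipeline(df)
    # count() is an action: Spark now plans and runs the job.
    return pipeline.count()
```

Calling build_pipeline alone returns immediately even on a very large input, because nothing has been computed yet.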

A data engineer uses a broadcast variable to share a DataFrame containing millions of rows across executors for lookup purposes. What will be the outcome?

A data engineer is working on a real-time analytics pipeline using Spark Structured Streaming.

They want the system to process incoming data in micro-batches at a fixed interval of 5 seconds.

Which code snippet fulfills this requirement?
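As a point of reference, a fixed micro-batch interval is set in Structured Streaming through DataStreamWriter.trigger with the processingTime argument. The sketch below assumes a console sink and append output mode, neither of which is stated in the question; the function name is hypothetical.

```python
def start_five_second_batches(stream_df):
    """Start a streaming query that fires a micro-batch every 5 seconds."""
    return (
        stream_df.writeStream
        .format("console")                    # assumed sink, for illustration
        .outputMode("append")                 # assumed output mode
        .trigger(processingTime="5 seconds")  # fixed-interval micro-batches
        .start()
    )
```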

A data engineer is working on the DataFrame df1 and wants the Name with the highest count to appear first (descending order by count), followed by the next highest, and so on.

The DataFrame has columns:

| id | Name | count | timestamp |
|----|---------|-------|-----------|
| 1 | USA | 10 | |
| 2 | India | 20 | |
| 3 | England | 50 | |
| 4 | India | 50 | |
| 5 | France | 20 | |
| 6 | India | 10 | |
| 7 | USA | 30 | |
| 8 | USA | 40 | |

Which code fragment should the engineer use to sort the data in the Name and count columns?

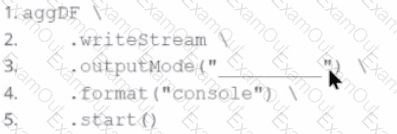
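A minimal sketch of such a sort uses DataFrame.orderBy with a per-column ascending flag. Sorting on count in descending order comes from the question; using Name in ascending order as a tie-breaker is an assumption, and the function name is hypothetical.

```python
def sort_by_count_then_name(df1):
    """Order rows by count (highest first), then by Name alphabetically."""
    # The Name tie-breaker is an assumption; the question only fixes
    # the descending order on count.
    return df1.orderBy(["count", "Name"], ascending=[False, True])
```

Note that df1.count refers to the DataFrame's count method, so the column is referenced here by its name as a string (df1["count"] would also work).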

In the code block below, aggDF contains aggregations on a streaming DataFrame:

Which output mode at line 3 ensures that the entire result table is written to the console during each trigger execution?

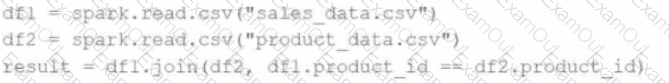
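For context, Structured Streaming's "complete" output mode writes the whole updated result table to the sink on every trigger, and it is intended for queries with aggregations. The sketch below is not the code from the image; it is a minimal illustration with a hypothetical function name.

```python
def write_full_result_table(aggDF):
    """Write the entire aggregated result table to the console each trigger."""
    return (
        aggDF.writeStream
        .outputMode("complete")  # emit the full result table every trigger
        .format("console")
        .start()
    )
```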

A data engineer writes the following code to join two DataFrames df1 and df2:

df1 = spark.read.csv("sales_data.csv") # ~10 GB

df2 = spark.read.csv("product_data.csv") # ~8 MB

result = df1.join(df2, df1.product_id == df2.product_id)

Which join strategy will Spark use?
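As background, spark.sql.autoBroadcastJoinThreshold defaults to 10 MB, so a table estimated at about 8 MB is normally eligible for a broadcast hash join, assuming the default has not been changed. The sketch below makes that choice explicit with a broadcast hint instead of relying on the size estimate; the function name is hypothetical, and joining on the column name (rather than on df1.product_id == df2.product_id) is a simplification that keeps a single product_id column in the result.

```python
def join_with_broadcast(df1, df2):
    """Join the large sales table to the small product table.

    hint("broadcast") asks the planner to copy df2 to every executor so
    that the large side, df1, does not need to be shuffled.
    """
    return df1.join(df2.hint("broadcast"), on="product_id", how="inner")
```

Calling explain() on the result prints the physical plan, which is where the chosen join strategy can be checked.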

A developer wants to refactor older Spark code to take advantage of built-in functions introduced in Spark 3.5.

The original code:

from pyspark.sql import functions as F

min_price = 110.50

result_df = prices_df.filter(F.col("price") > min_price).agg(F.count("*"))

Which code block should the developer use to refactor the code?
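One built-in added to pyspark.sql.functions in Spark 3.5 that fits this pattern is count_if, which counts the rows for which a boolean expression is true. The sketch below is a possible refactoring under that assumption, not necessarily the intended answer; the function name count_above is hypothetical.

```python
def count_above(prices_df, min_price):
    """Count rows whose price exceeds min_price in a single aggregation."""
    # Imported inside the function so this module can be loaded even
    # where PySpark is not installed.
    from pyspark.sql import functions as F

    # count_if replaces the separate filter(...) + count("*") steps.
    return prices_df.agg(F.count_if(F.col("price") > F.lit(min_price)))
```

With the values from the question this would be called as result_df = count_above(prices_df, 110.50).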

Which feature of Spark Connect should be considered when designing an application that plans to enable remote interaction with a Spark cluster?

A Spark DataFrame df is cached using the MEMORY_AND_DISK storage level, but the DataFrame is too large to fit entirely in memory.

What is the likely behavior when Spark runs out of memory to store the DataFrame?
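For reference, this storage level is requested through DataFrame.persist. With MEMORY_AND_DISK, partitions that do not fit in memory are stored on disk and read back from there when needed, rather than being dropped. The helper name below is hypothetical.

```python
def persist_with_spill(df):
    """Persist df so that partitions which do not fit in memory go to disk."""
    # Imported inside the function so this module can be loaded even
    # where PySpark is not installed.
    from pyspark import StorageLevel

    return df.persist(StorageLevel.MEMORY_AND_DISK)
```

The data is only materialized once an action runs on the persisted DataFrame; afterwards df.storageLevel reports the level in effect.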