A Spark application suffers from too many small tasks due to excessive partitioning. How can this be fixed without a full shuffle?

Options:
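
The option list for this question did not survive, so what follows is only a sketch of one candidate. `DataFrame.coalesce` merges existing partitions without a full shuffle, whereas `repartition` always shuffles. The helper name `shrink_partitions` and the default target of 8 are hypothetical, and `df` is assumed to be a PySpark DataFrame.

```python
def shrink_partitions(df, target_partitions=8):
    """Merge partitions down to `target_partitions` without a full shuffle.

    `df` is assumed to be a pyspark.sql.DataFrame (hypothetical helper).
    """
    current = df.rdd.getNumPartitions()
    # coalesce can only lower the partition count, so skip it when the
    # DataFrame already has no more partitions than the target.
    if target_partitions >= current:
        return df
    # Narrow dependency: neighbouring partitions are merged in place.
    return df.coalesce(target_partitions)
```

Fewer, larger partitions mean fewer, longer tasks, which reduces per-task scheduling overhead.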

A developer is working with a pandas DataFrame containing user behavior data from a web application.

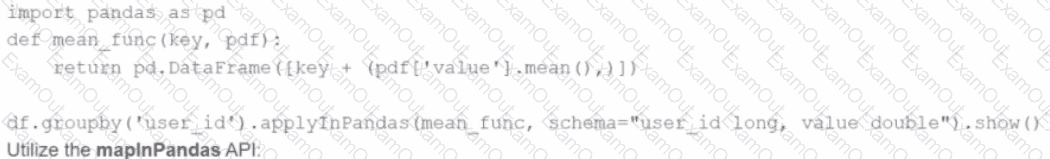

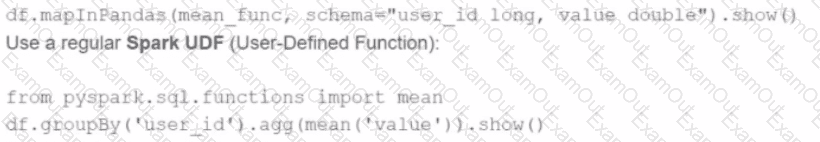

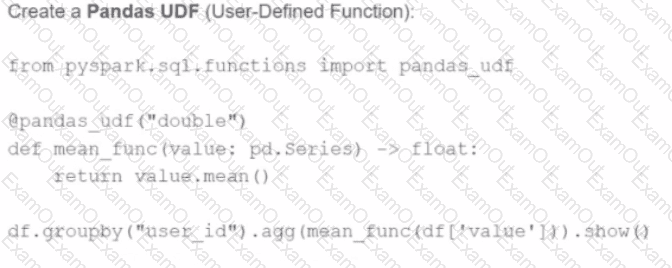

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A)

Use the applyInPandas API

B)

C)

D)

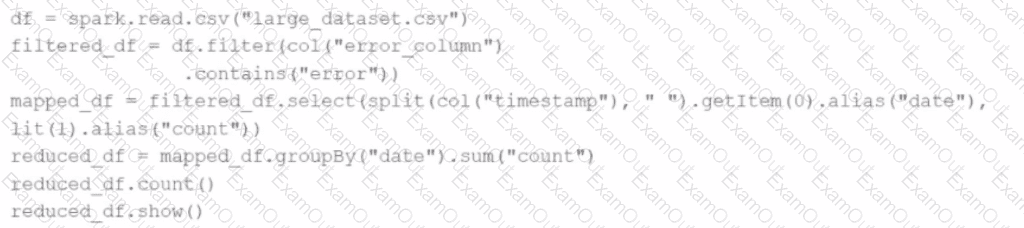
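
As a rough illustration of what option A refers to, the sketch below applies a pandas function to each group with `applyInPandas`, so that groups are processed in parallel on the workers. The column names `user_id` and `clicks`, both function names, and the assumption that the input has exactly those two columns are hypothetical; the original images are not available.

```python
def center_clicks(pdf):
    """Runs once per group on a worker.

    `pdf` is a pandas DataFrame holding all rows for one user.
    """
    # Subtract the group's mean click count from each row.
    return pdf.assign(clicks=pdf["clicks"] - pdf["clicks"].mean())


def center_clicks_per_user(df):
    """`df` is assumed to be a PySpark DataFrame with columns user_id, clicks."""
    return df.groupBy("user_id").applyInPandas(
        center_clicks,
        schema="user_id string, clicks double",  # schema of the returned rows
    )
```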

Given the code:

from pyspark.sql.functions import col, lit, split

df = spark.read.csv("large_dataset.csv")

filtered_df = df.filter(col("error_column").contains("error"))

mapped_df = filtered_df.select(split(col("timestamp"), " ").getItem(0).alias("date"), lit(1).alias("count"))

reduced_df = mapped_df.groupBy("date").sum("count")

reduced_df.count()

reduced_df.show()

At which point will Spark actually begin processing the data?
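
In the snippet above, `filter`, `select`, and `groupBy(...).sum(...)` are transformations, and `reduced_df.count()` is the first action. The toy model below, written in plain Python, illustrates the general idea that transformations are only recorded and an action runs them. `LazyPipeline` and `has_error` are hypothetical names, and this is not how Spark is implemented internally.

```python
class LazyPipeline:
    """Toy stand-in for a lazily evaluated DataFrame (not Spark's internals)."""

    def __init__(self, rows, steps=()):
        self._rows = rows
        self._steps = tuple(steps)

    def filter(self, predicate):
        # Transformation: record the step, touch no data.
        return LazyPipeline(self._rows, self._steps + (("filter", predicate),))

    def map(self, fn):
        # Transformation: record the step, touch no data.
        return LazyPipeline(self._rows, self._steps + (("map", fn),))

    def count(self):
        # Action: run every recorded step, then return a result.
        rows = self._rows
        for kind, fn in self._steps:
            if kind == "filter":
                rows = [r for r in rows if fn(r)]
            else:
                rows = [fn(r) for r in rows]
        return len(rows)


calls = []

def has_error(line):
    calls.append(line)  # track when the predicate actually runs
    return "error" in line

pipeline = LazyPipeline(["error a", "ok", "error b"]).filter(has_error)
calls_before_action = len(calls)  # predicate has not run yet
matching = pipeline.count()       # the action triggers the work
```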

How can a Spark developer ensure optimal resource utilization when running Spark jobs in Local Mode for testing?

Options:

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold.

Which type of join will Adaptive Query Execution (AQE) choose in this case?

A data engineer is working on a Streaming DataFrame (streaming_df) with the following streaming data:

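| id | name | count | timestamp |
|----|------|-------|-----------|
| 1 | Delhi | 20 | 2024-09-19T10:11 |
| 1 | Delhi | 50 | 2024-09-19T10:12 |
| 2 | London | 50 | 2024-09-19T10:15 |
| 3 | Paris | 30 | 2024-09-19T10:18 |
| 3 | Paris | 20 | 2024-09-19T10:20 |
| 4 | Washington | 10 | 2024-09-19T10:22 |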

Which operation is supported with streaming_df?

A data scientist is working with a massive dataset that exceeds the memory capacity of a single machine. The data scientist is considering using Apache Spark™ instead of traditional single-machine languages like standard Python scripts.

Which two advantages does Apache Spark™ offer over a normal single-machine language in this scenario? (Choose 2 answers)

A data scientist has been investigating user profile data to build features for their model. After some exploratory data analysis, the data scientist identified that some records in the user profiles contain NULL values in too many fields to be useful.

The schema of the user profile table looks like this:

user_id STRING,

username STRING,

date_of_birth DATE,

country STRING,

created_at TIMESTAMP

The data scientist decided that if any record contains a NULL value in any field, they want to remove that record from the output before further processing.

Which block of Spark code can be used to achieve these requirements?
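
For reference, a minimal sketch of dropping every row that has a NULL in any column, assuming `df` is a PySpark DataFrame with the schema above. The helper name `drop_incomplete_profiles` is hypothetical.

```python
def drop_incomplete_profiles(df):
    """Return `df` without rows that contain a NULL in any column.

    `df` is assumed to be a pyspark.sql.DataFrame (hypothetical helper).
    """
    # how="any" drops a row as soon as one column is NULL;
    # how="all" would drop it only when every column is NULL.
    return df.na.drop(how="any")
```

`df.dropna()` behaves the same way, since `how` defaults to `"any"`.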

A data engineer observes that the upstream streaming source feeds the event table frequently and sends duplicate records. Upon analyzing the current production table, the data engineer found that the time difference in the event_timestamp column of the duplicate records is, at most, 30 minutes.

To remove the duplicates, the engineer adds the code:

df = df.withWatermark("event_timestamp", "30 minutes")

What is the result?
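
`withWatermark` on its own only declares how late events may arrive; it does not drop any rows. A minimal sketch of pairing it with a deduplication step follows, assuming PySpark 3.5 or later, where `dropDuplicatesWithinWatermark` is available. The key column `event_id` and the helper name `dedupe_events` are hypothetical.

```python
def dedupe_events(df, key_cols=("event_id",)):
    """Drop duplicates that arrive within the 30-minute watermark.

    `df` is assumed to be a streaming pyspark.sql.DataFrame;
    `event_id` is a hypothetical key column.
    """
    return (
        df.withWatermark("event_timestamp", "30 minutes")
        # The watermark bounds how long deduplication state is kept.
        .dropDuplicatesWithinWatermark(list(key_cols))
    )
```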

A data engineer is running a Spark job to process a dataset of 1 TB stored in distributed storage. The cluster has 10 nodes, each with 16 CPUs. Spark UI shows:

Low number of Active Tasks

Many tasks complete in milliseconds

Fewer tasks than available CPUs

Which approach should be used to adjust the partitioning for optimal resource allocation?
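
As a back-of-the-envelope sketch, a partition count for this cluster can be derived from the numbers in the question. The 128 MiB target partition size and the reading of 1 TB as 1 TiB are assumptions, not part of the question.

```python
import math

nodes, cpus_per_node = 10, 16
total_cores = nodes * cpus_per_node            # 160 task slots

dataset_bytes = 1024 ** 4                      # 1 TB, treated as 1 TiB (assumption)
target_partition_bytes = 128 * 1024 ** 2       # assumed target size per partition

# Smallest partition count that keeps each partition at or below the target.
min_partitions = math.ceil(dataset_bytes / target_partition_bytes)   # 8192

# Round up to a multiple of the core count so each wave of tasks
# can keep every core busy.
waves = math.ceil(min_partitions / total_cores)                      # 52
target_partitions = waves * total_cores                              # 8320

# With a DataFrame `df`, this would be applied as: df.repartition(target_partitions)
```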