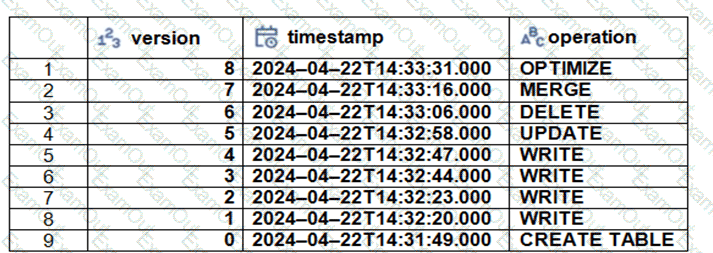

The Delta transaction log for the ‘students’ table is shown using the ‘DESCRIBE HISTORY students’ command. A Data Engineer needs to query the table as it existed before the UPDATE operation listed in the log.

Which commands should the Data Engineer use to achieve this? (Choose two.)

In which of the following file formats is data from Delta Lake tables primarily stored?

A dataset has been defined using Delta Live Tables and includes an expectations clause:

CONSTRAINT valid_timestamp EXPECT (timestamp > '2020-01-01') ON VIOLATION FAIL UPDATE

What is the expected behavior when a batch containing data that violates this constraint is processed?

A data engineer needs access to a table new_table, but they do not have the correct permissions. They can ask the table owner for permission, but they do not know who the table owner is.

Which approach can be used to identify the owner of new_table?

Which of the following commands can be used to write data into a Delta table while avoiding the writing of duplicate records?

A data engineer is reviewing the documentation on audit logs in Databricks for compliance purposes and needs to understand the format in which audit logs output events.

How are events formatted in Databricks audit logs?

A data engineering team is using Kafka to capture event data and then ingest it into Databricks. The team wants to be able to see these historical events. Medallion architecture is already in place. The team wants to be mindful of costs.

Where should this historical event data be stored?

Which of the following must be specified when creating a new Delta Live Tables pipeline?

Which of the following statements regarding the relationship between Silver tables and Bronze tables is always true?

A data engineer is developing an ETL process based on Spark SQL. The execution fails. The data engineer checks the Spark UI and sees the following errors:

Which two corrective actions should the data engineer perform to resolve this issue?

Choose 2 answers.

Narrow the filters in order to collect less data in the query