Perform the following tasks:

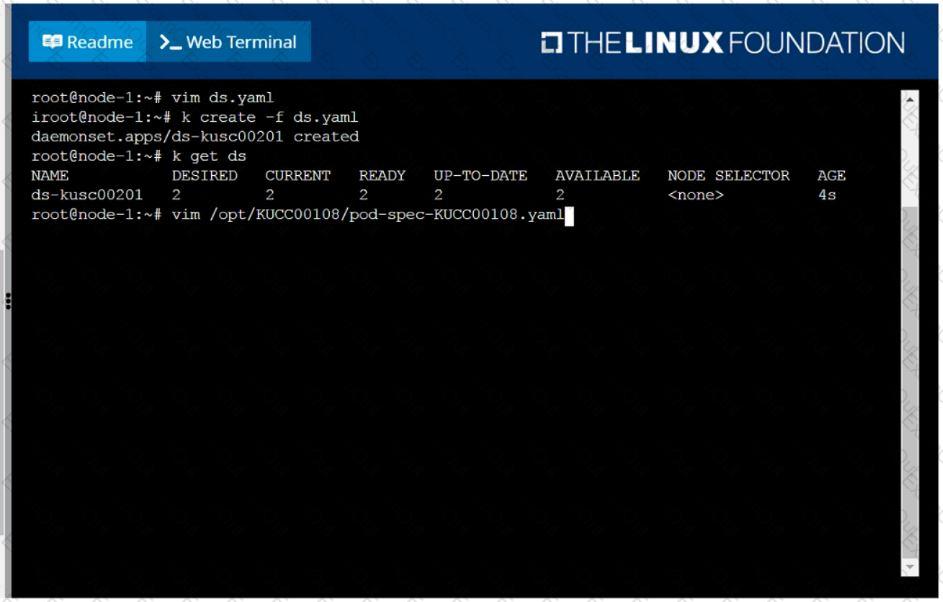

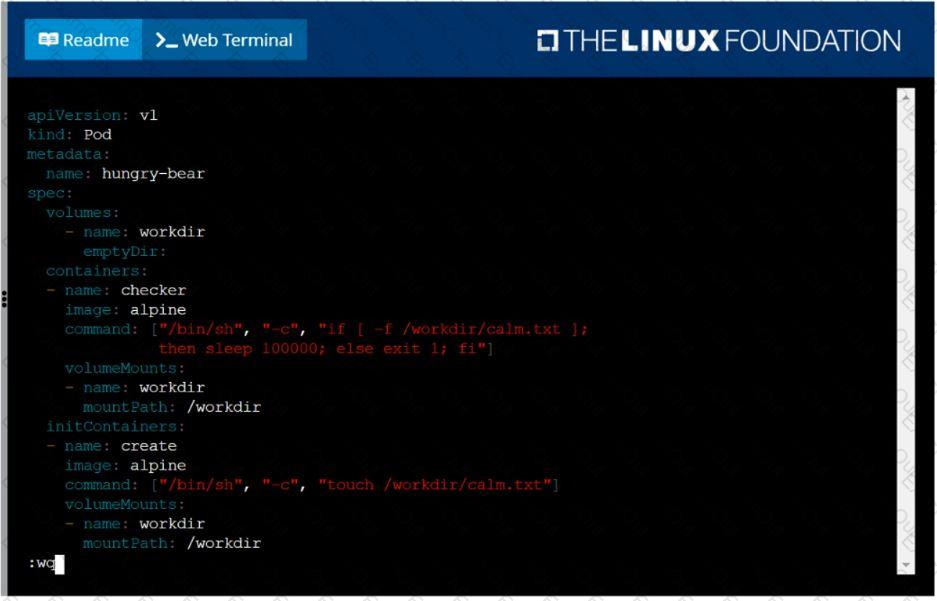

Add an init container to the pod hungry-bear, which is defined in the spec file /opt/KUCC00108/pod-spec-KUCC00108.yaml.

The init container should create an empty file named /workdir/calm.txt.

If /workdir/calm.txt is not detected, the pod should exit.

Once the spec file has been updated with the init container definition, create the pod.
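A minimal sketch of the shape the updated spec could take. It assumes the main container uses a busybox image and performs the "file exists, otherwise exit" check itself; the images, the volume name `workdir`, the container names and the local file `hungry-bear.yaml` are illustrative assumptions. On the exam host, merge the `initContainers` and `volumes` sections into /opt/KUCC00108/pod-spec-KUCC00108.yaml instead of replacing that file.

```shell
# Example spec in a hypothetical local file; merge into the real spec file instead.
cat > hungry-bear.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hungry-bear
spec:
  # emptyDir volume shared by the init container and the main container
  volumes:
    - name: workdir
      emptyDir: {}
  initContainers:
    - name: create-calm            # assumed name
      image: busybox:1.28          # assumed image
      command: ["sh", "-c", "touch /workdir/calm.txt"]
      volumeMounts:
        - name: workdir
          mountPath: /workdir
  containers:
    - name: hungry-bear            # assumed: stands in for the existing main container
      image: busybox:1.28
      # Exit with an error if the init container did not create the file
      command: ["sh", "-c", "if [ -f /workdir/calm.txt ]; then sleep 3600; else exit 1; fi"]
      volumeMounts:
        - name: workdir
          mountPath: /workdir
EOF

# Create the pod from the updated spec
kubectl apply -f hungry-bear.yaml
```

Because init containers must finish successfully before the main container starts, `kubectl get pod hungry-bear` should move from `Init:0/1` to `Running` once `touch` has completed.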

Task

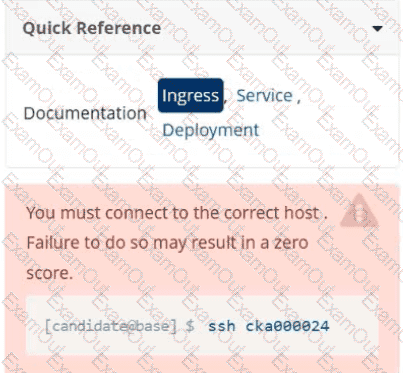

Create a new Ingress resource as follows:

- Name: echo
- Namespace: sound-repeater
- Exposing Service echoserver-service on http://example.org/echo using Service port 8080

The availability of Service echoserver-service can be checked using the following command, which should return 200:

[candidate@cka000024] $ curl -o /dev/null -s -w "%{http_code}\n" http://example.org/echo

Score: 4%
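One way to express this Ingress, sketched under assumptions: `pathType: Prefix` and the file name `ingress-echo.yaml` are choices made here, and if the cluster has no default IngressClass an `ingressClassName` field may also be needed (that depends on the environment and is not stated in the task).

```shell
# Write the Ingress manifest (hypothetical file name)
cat > ingress-echo.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo
  namespace: sound-repeater
spec:
  rules:
    - host: example.org
      http:
        paths:
          - path: /echo
            pathType: Prefix        # assumed; Exact would also match /echo
            backend:
              service:
                name: echoserver-service
                port:
                  number: 8080
EOF

kubectl apply -f ingress-echo.yaml
```

After applying it, the `curl` command from the task should report 200.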

Task

Create a persistent volume named app-data with a capacity of 1Gi and access mode ReadOnlyMany. The volume type is hostPath and its location is /srv/app-data.

Score: 7%
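A minimal PersistentVolume manifest matching the stated name, capacity, access mode and hostPath; only the file name `pv-app-data.yaml` is an assumption.

```shell
# PersistentVolume backed by a directory on the node
cat > pv-app-data.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-data
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadOnlyMany
  hostPath:
    path: /srv/app-data
EOF

kubectl apply -f pv-app-data.yaml
```

PersistentVolumes are cluster-scoped, so no namespace is set.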

Task

Reconfigure the existing deployment front-end and add a port specification named http exposing port 80/tcp of the existing container nginx.

Create a new service named front-end-svc exposing the container port http.

Configure the new service to also expose the individual Pods via a NodePort on the nodes on which they are scheduled.
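A sketch of both steps, assuming the deployment lives in the current namespace and that Service port 80 is acceptable (the task does not state a Service port). The patch file name `front-end-patch.yaml` is hypothetical; `kubectl expose` reuses the deployment's own selector, so no pod labels need to be guessed.

```shell
# Strategic merge patch: containers are merged by name, so only the
# existing "nginx" container gains the named port.
cat > front-end-patch.yaml <<'EOF'
spec:
  template:
    spec:
      containers:
        - name: nginx
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
EOF
kubectl patch deployment front-end --patch "$(cat front-end-patch.yaml)"

# NodePort service targeting the named container port "http"
kubectl expose deployment front-end --name=front-end-svc \
  --port=80 --target-port=http --type=NodePort
```

Using the port name as `--target-port` keeps the Service correct even if the container port number changes later.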

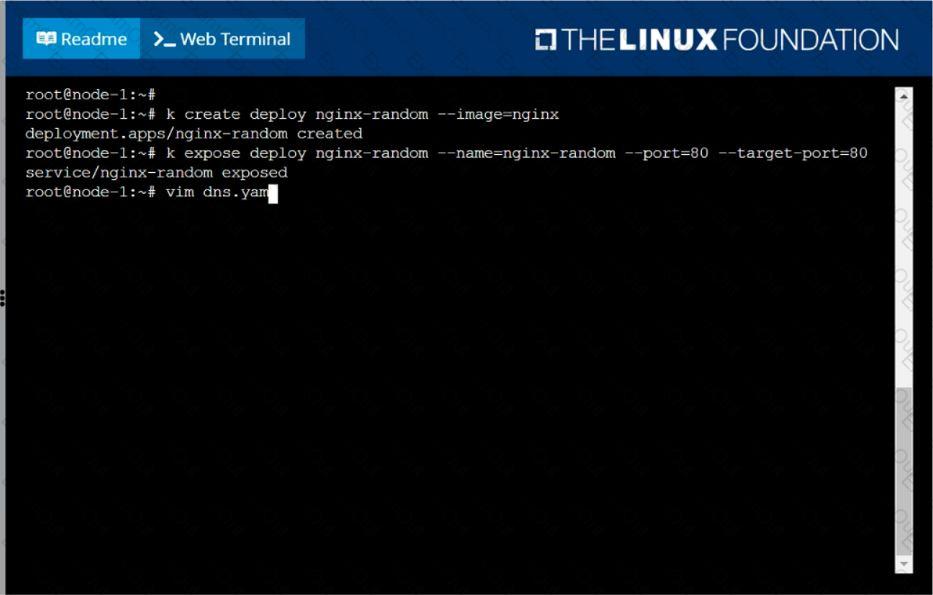

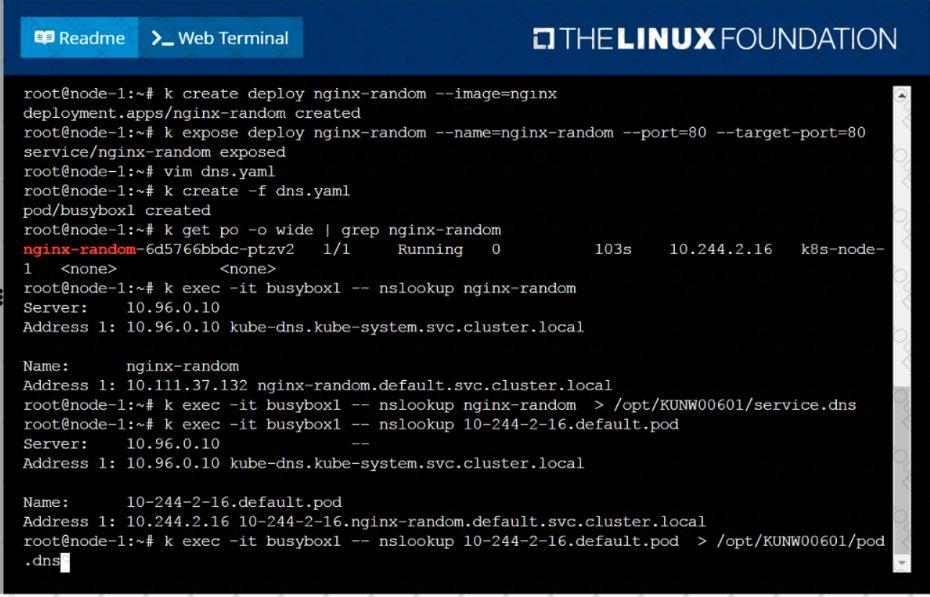

Create a deployment as follows:

- Name: nginx-random
- Exposed via a service named nginx-random
- Ensure that the service and pod are accessible via their respective DNS records
- The container(s) within any pod(s) running as part of this deployment should use the nginx image

Next, use the utility nslookup to look up the DNS records of the service and pod and write the output to /opt/KUNW00601/service.dns and /opt/KUNW00601/pod.dns respectively.
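A sketch assuming the `default` namespace, the default cluster domain `cluster.local`, Service port 80, and a throwaway helper pod `dns-test` running busybox:1.28 (the helper name and image are assumptions). A pod's DNS A record uses its IP with dots replaced by dashes, under `<namespace>.pod.cluster.local`.

```shell
# Deployment and Service in one manifest (hypothetical file name)
cat > nginx-random.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-random
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-random
  template:
    metadata:
      labels:
        app: nginx-random
    spec:
      containers:
        - name: nginx
          image: nginx
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-random
spec:
  selector:
    app: nginx-random
  ports:
    - port: 80
      targetPort: 80
EOF
kubectl apply -f nginx-random.yaml

# Helper pod that provides nslookup (assumed name and image)
kubectl run dns-test --image=busybox:1.28 --restart=Never -- sleep 3600
kubectl wait --for=condition=Ready pod/dns-test --timeout=60s

# Service record
kubectl exec dns-test -- nslookup nginx-random > /opt/KUNW00601/service.dns

# Pod record: dots in the pod IP become dashes
POD_IP=$(kubectl get pod -l app=nginx-random -o jsonpath='{.items[0].status.podIP}')
POD_DNS=$(echo "$POD_IP" | tr . -)
kubectl exec dns-test -- nslookup "$POD_DNS.default.pod.cluster.local" > /opt/KUNW00601/pod.dns
```

`kubectl create deployment nginx-random --image=nginx` followed by `kubectl expose deployment nginx-random --port=80` would produce an equivalent Deployment and Service.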

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh Cka000059

Context

A kubeadm-provisioned cluster was migrated to a new machine. It needs configuration changes to run successfully.

Task

Fix a single-node cluster that got broken during machine migration.

First, identify the broken cluster components and investigate what broke them.

The decommissioned cluster used an external etcd server.

Next, fix the configuration of all broken cluster components.
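The actual fix depends on what the investigation finds, so the following is only a sketch of where to look first. It assumes a kubeadm layout (static pod manifests in /etc/kubernetes/manifests), a CRI runtime reachable by `crictl`, and a root shell (for example after `sudo -i`). Given the note about an external etcd server, one thing worth checking is which endpoint the API server's `--etcd-servers` flag points at.

```shell
MANIFESTS=/etc/kubernetes/manifests   # kubeadm's static pod manifest directory

# List all containers, including exited control-plane ones,
# since the API server may be unreachable through kubectl
crictl ps -a

# Recent kubelet logs often show why a static pod keeps restarting
journalctl -u kubelet --no-pager | tail -n 50

# Show which etcd endpoint(s) the API server is configured to use
grep -n -- '--etcd-servers' "$MANIFESTS/kube-apiserver.yaml"
```

The kubelet watches the manifest directory, so saving an edited static pod manifest is enough for it to recreate that component.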

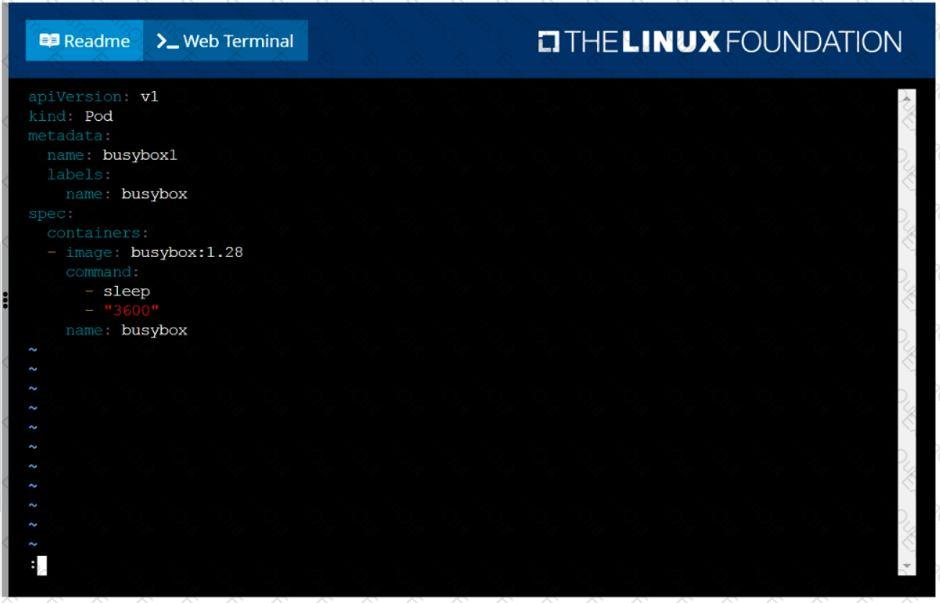

Create a busybox pod that runs the command “sleep 3600”.

List all pods, showing each pod's name and namespace, using a JSONPath expression.
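A short sketch of both steps; the pod name `busybox`, the untagged image and the file name are assumptions. The JSONPath `range` prints one line per pod across all namespaces.

```shell
# Pod whose only container runs "sleep 3600"
cat > busybox.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  containers:
    - name: busybox
      image: busybox
      command: ["sleep", "3600"]
EOF
kubectl apply -f busybox.yaml

# name<TAB>namespace for every pod in every namespace
kubectl get pods -A -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.namespace}{"\n"}{end}'
```

`kubectl run busybox --image=busybox --restart=Never -- sleep 3600` creates the same pod without a manifest file.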

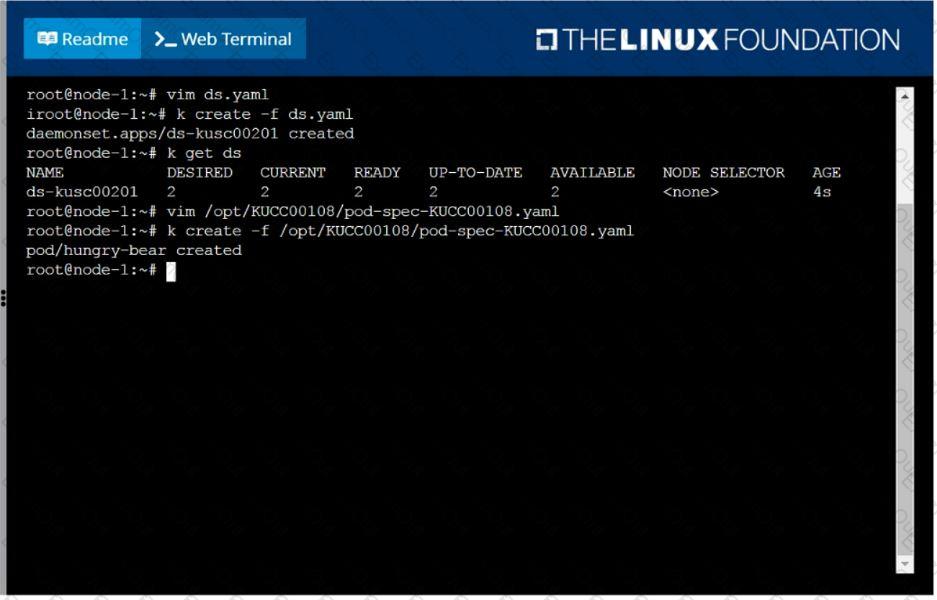

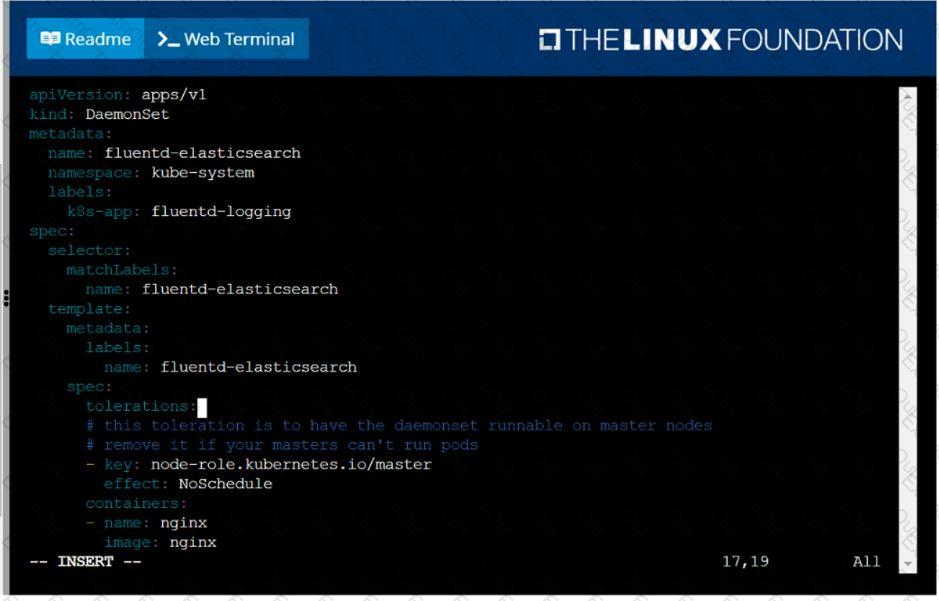

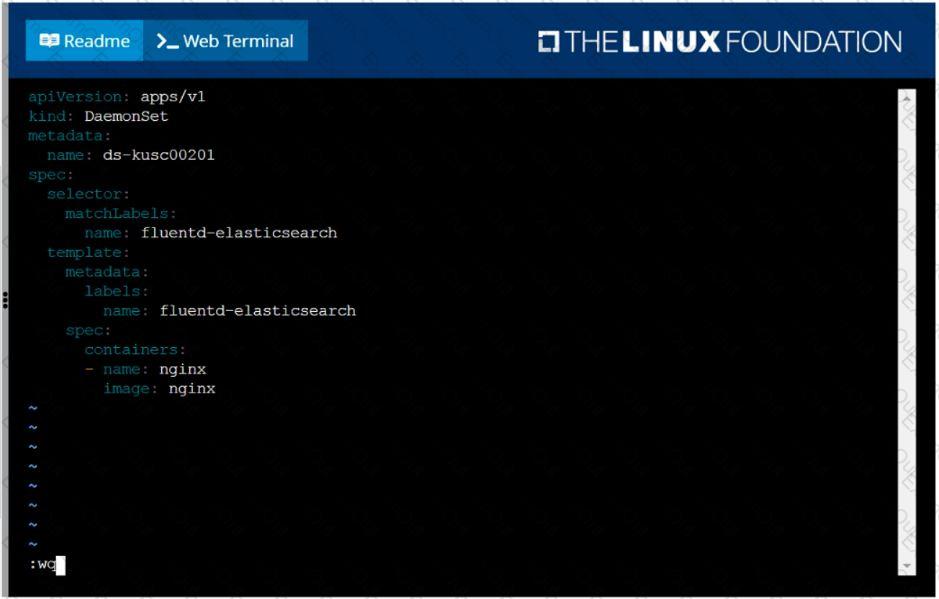

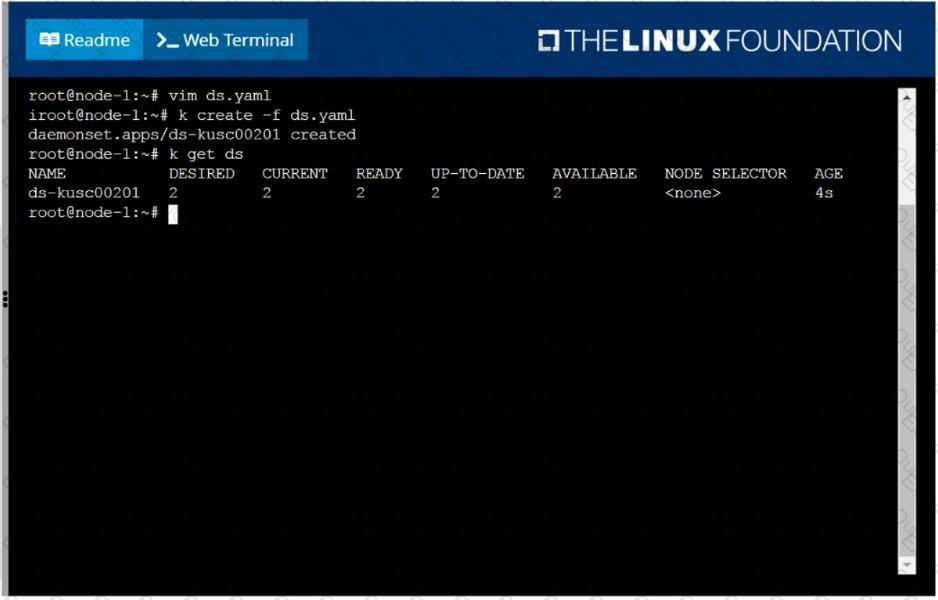

Ensure a single instance of pod nginx is running on each node of the Kubernetes cluster, where nginx also represents the image name to be used. Do not override any taints currently in place.

Use DaemonSet to complete this task and use ds-kusc00201 as DaemonSet name.

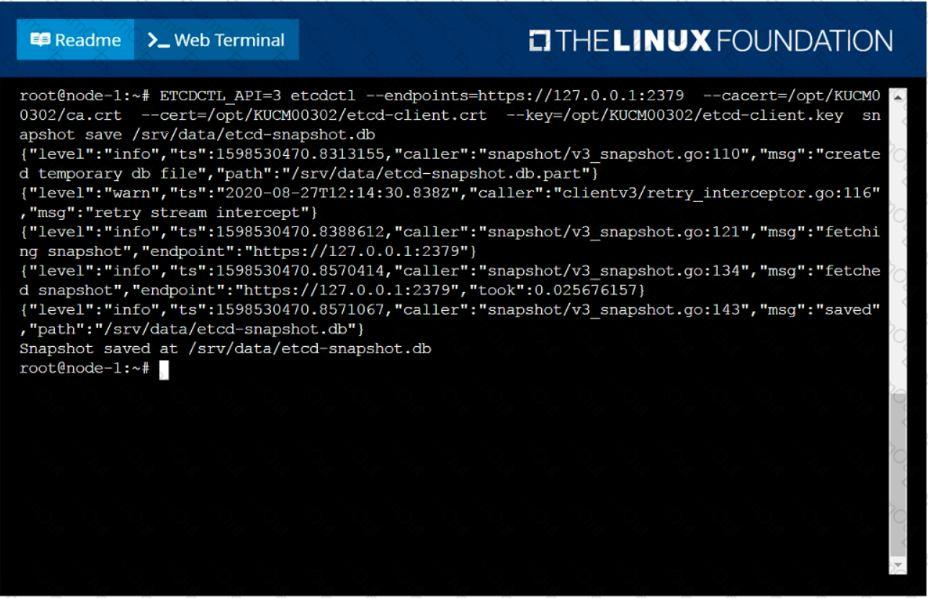

Create a snapshot of the etcd instance running at https://127.0.0.1:2379, saving the snapshot to the file path /srv/data/etcd-snapshot.db.

The following TLS certificates/key are supplied for connecting to the server with etcdctl:

CA certificate: /opt/KUCM00302/ca.crt

Client certificate: /opt/KUCM00302/etcd-client.crt

Client key: /opt/KUCM00302/etcd-client.key
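A sketch of the snapshot command using the endpoint, certificates and output path given in the task; it assumes `etcdctl` is installed on the host you are connected to and that /srv/data already exists.

```shell
export ETCDCTL_API=3   # select the v3 API (already the default in etcdctl 3.4+)

# Save a snapshot of the etcd instance over TLS
etcdctl --endpoints=https://127.0.0.1:2379 \
  --cacert=/opt/KUCM00302/ca.crt \
  --cert=/opt/KUCM00302/etcd-client.crt \
  --key=/opt/KUCM00302/etcd-client.key \
  snapshot save /srv/data/etcd-snapshot.db
```

`--cacert` verifies the server, while `--cert` and `--key` authenticate the client.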
