A client has multiple warehouses from which orders can be fulfilled. The cost of shipping goods from each warehouse varies by day, depending on the number of workers available. The Architect needs to make sure that each order is shipped from the lowest-cost warehouse that is open.

How should this functionality be implemented?
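The requirement itself can be stated as a small selection rule. The following is a minimal sketch in Python, not Adobe Commerce code: the `Warehouse` class, its fields, and the sample data are all hypothetical and only illustrate "cheapest among the open warehouses".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Warehouse:
    # Hypothetical fields for illustration; not an Adobe Commerce entity.
    code: str
    is_open: bool
    shipping_cost: float  # cost of shipping from this warehouse today


def cheapest_open_warehouse(warehouses: List[Warehouse]) -> Optional[Warehouse]:
    """Return the open warehouse with the lowest cost, or None if all are closed."""
    open_warehouses = [w for w in warehouses if w.is_open]
    if not open_warehouses:
        return None
    return min(open_warehouses, key=lambda w: w.shipping_cost)


# Made-up sample data: "south" is cheapest but closed, so it must be skipped.
warehouses = [
    Warehouse("north", is_open=True, shipping_cost=7.50),
    Warehouse("south", is_open=False, shipping_cost=4.00),
    Warehouse("east", is_open=True, shipping_cost=6.25),
]

selected = cheapest_open_warehouse(warehouses)
print(selected.code if selected else "no open warehouse")
```

The sketch filters on availability before comparing cost, so a closed warehouse is never selected even when it is the cheapest.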

An existing Adobe Commerce website is moving to a headless implementation.

The existing website features an "All Brands" page, as well as individual pages for each brand. All brand-related pages are cached in Varnish using tags in the same manner as products and categories.

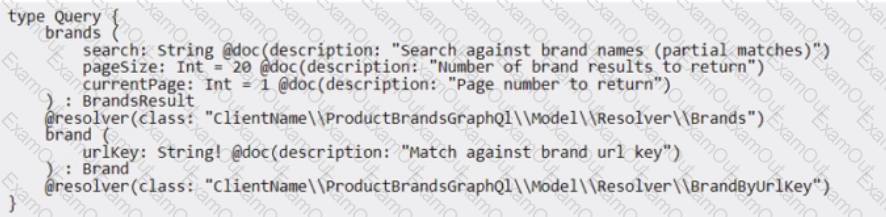

Two new GraphQL queries have been created to make this information available to the frontend for the new headless implementation:

During testing, the queries sometimes return out-of-date information. How should this problem be solved while maintaining performance?

Since the last production deployment, customers cannot complete checkout.

The error logs show the following message multiple times:

main.CRITICAL: Report ID: webapi-61b9fe83f0c3e; Message: Infinite loop detected, review the trace for the looping path

The Architect finds a deployed feature that should limit delivery for some specific postcodes.

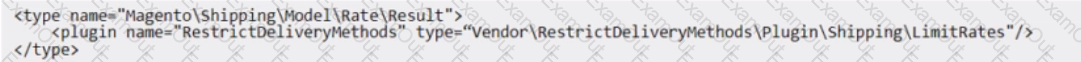

The Architect sees the following code deployed in etc/webapi_rest/di.xml and etc/frontend/di.xml:

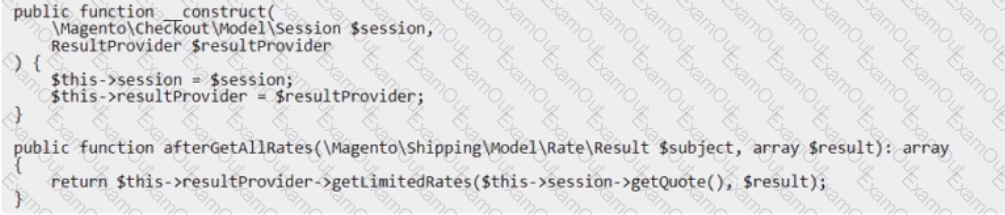

LimitRates.php:

Which step should the Architect perform to solve the issue?

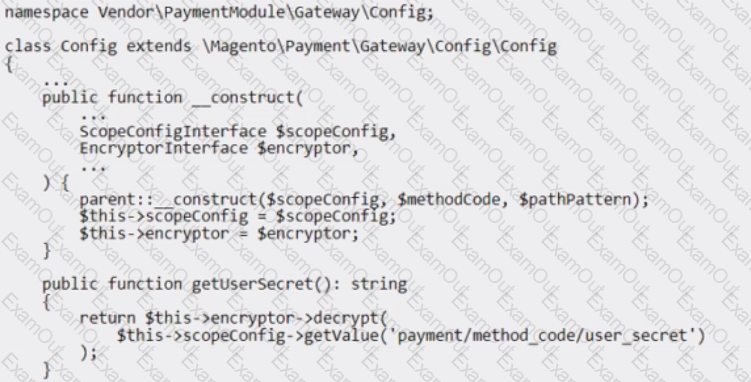

While reviewing a newly developed pull request that refactors multiple custom payment methods, the Architect notices multiple classes that depend on \Magento\Framework\Encryption\EncryptorInterface to decrypt credentials for sensitive data. The code that is commonly repeated is as follows:

The Architect needs to recommend an optimal solution to avoid redundant dependency and duplicate code among the methods. Which solution should the Architect recommend?

A custom cron job has been added to an Adobe Commerce system to collect data for several reports. Its crontab.xml configuration is as follows:

The job is data intensive and runs for between 20 and 30 minutes each night.

Within a few days of deployment, it is noticed that the site's sitemap.xml file has not been updated since the new job was added.

What should be done to fix this issue?

An Adobe Commerce Architect is troubleshooting an issue on an Adobe Commerce Cloud project that is not yet live.

The developers copied the Staging database to Production in preparation for go-live. However, when the developers test their Product Import feature, the new products do not appear on the front end.

The developers suspect the Varnish Cache is not being cleared. Staging seems to work as expected. Production was working before the database migration.

What is the likely cause?

A developer needs to uninstall two custom modules as well as the database data and schemas. The developer uses the following command: bin/magento module:uninstall Vendor_SampleMinimal Vendor_SampleModifyContent

When the command is run from CLI, the developer fails to remove the database schema and data defined in the module Uninstall class. Which three requirements should the Architect recommend be checked to troubleshoot this issue? (Choose three.)

An Adobe Commerce Architect is supporting deployment and building tools for on-premises Adobe Commerce projects. The tool is executing build scripts on a centralized server and using an SSH connection to deploy to project servers.

A client reports that users cannot work with the Admin Panel because the site breaks every time they change the interface locale.

Considering maintainability, which solution should the Architect implement?

An Architect needs to review a custom product feed export module that a developer created for a merchant. During final testing before the solution is deployed, the product feed output is verified as correct. All unit and integration tests for code pass.

However, once the solution is deployed to production, the product price values in the feed are incorrect for several products. The products with incorrect data are all currently part of a content staging campaign where their prices have been reduced.

What did the developer do incorrectly that caused the feed output to be incorrect for products in the content staging campaign?

An Architect is investigating a merchant's Adobe Commerce production environment where all customer session data is randomly being lost. Customer session data has been configured to be persisted using Redis, as are all caches (except full page cache, which is handled via Varnish).

After an initial review, the Architect is able to replicate the loss of customer session data by flushing the Magento cache storage, either via the Adobe Commerce Admin Panel or by running bin/magento cache:flush on the command line. Refreshing all the caches in the Adobe Commerce Admin Panel or running bin/magento cache:clean on the command line does not cause session data to be lost.

What should be the next step?
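One situation consistent with these symptoms, offered as an assumption rather than a confirmed diagnosis, is that sessions and caches live in the same Redis database. Cleaning removes only the application's own cache entries, while flushing purges the whole storage. The toy model below is plain Python, not Redis or Adobe Commerce code; the `KeyValueStore` class and the `cache:` and `sess:` key prefixes are hypothetical.

```python
from typing import Dict


class KeyValueStore:
    """Toy stand-in for a single storage shared by cache and session data."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def clean_by_prefix(self, prefix: str) -> None:
        # Comparable in spirit to cache:clean: only entries recognised as
        # cache records (here, by a hypothetical prefix) are deleted.
        for key in [k for k in self.data if k.startswith(prefix)]:
            del self.data[key]

    def flush(self) -> None:
        # Comparable in spirit to cache:flush: the whole storage is purged,
        # including anything else that happens to be kept in it.
        self.data.clear()


store = KeyValueStore()
store.data["cache:config"] = "serialized config"
store.data["sess:abc123"] = "customer session"

store.clean_by_prefix("cache:")
session_survives_clean = "sess:abc123" in store.data

store.data["cache:config"] = "serialized config"
store.flush()
session_survives_flush = "sess:abc123" in store.data

print(session_survives_clean, session_survives_flush)
```

In this model the session entry survives a clean but not a flush, which matches the behaviour described in the scenario. Whether that is what happens on the merchant's system depends on how the Redis session and cache backends are actually configured.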