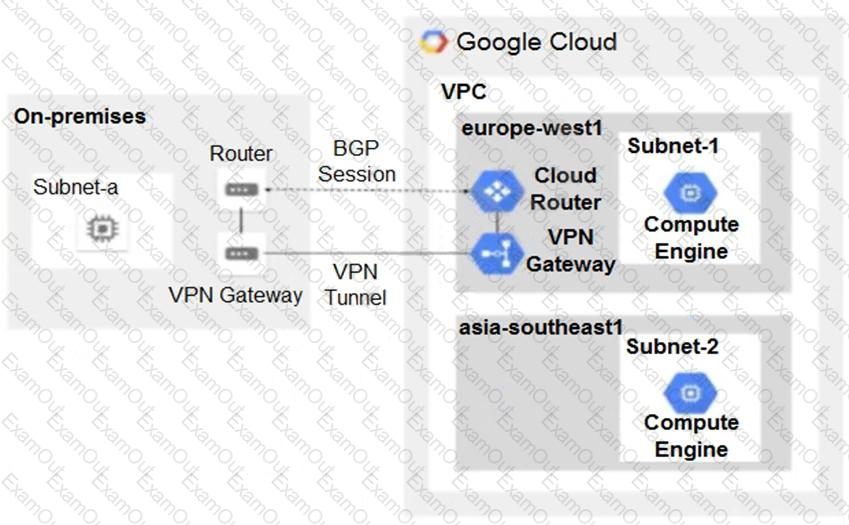

You have the following routing design. You discover that Compute Engine instances in Subnet-2 in the asia-southeast1 region cannot communicate with compute resources on-premises. What should you do?

You have applications running in the us-west1 and us-east1 regions. You want to build a highly available VPN that provides 99.99% availability to connect your applications from your project to the cloud services provided by your partner's project while minimizing the amount of infrastructure required. Your partner's services are also in the us-west1 and us-east1 regions. You want to implement the simplest solution. What should you do?

Your on-premises data center has 2 routers connected to your GCP through a VPN on each router. All applications are working correctly; however, all of the traffic is passing across a single VPN instead of being load-balanced across the 2 connections as desired.

During troubleshooting you find:

• Each on-premises router is configured with the same ASN.

• Each on-premises router is configured with the same routes and priorities.

• Both on-premises routers are configured with a VPN connected to a single Cloud Router.

• The VPN logs have no-proposal-chosen lines when the VPNs are connecting.

• BGP session is not established between one on-premises router and the Cloud Router.

What is the most likely cause of this problem?

You successfully provisioned a single Dedicated Interconnect. The physical connection is at a colocation facility closest to us-west2. Seventy-five percent of your workloads are in us-east4, and the remaining twenty-five percent of your workloads are in us-central1. All workloads have the same network traffic profile. You need to minimize data transfer costs when deploying VLAN attachments. What should you do?

You are designing an IP address scheme for new private Google Kubernetes Engine (GKE) clusters. Due to IP address exhaustion of the RFC 1918 address space in your enterprise, you plan to use privately used public IP space for the new clusters. You want to follow Google-recommended practices. What should you do after designing your IP scheme?

Your company has a single Virtual Private Cloud (VPC) network deployed in Google Cloud with access from on-premises locations using Cloud Interconnect connections. Your company must be able to send traffic to Cloud Storage only through the Interconnect links while accessing other Google APIs and services over the public internet. What should you do?

Your company is planning a migration to Google Kubernetes Engine. Your application team informed you that they require a minimum of 60 Pods per node and a maximum of 100 Pods per node. Which Pod per node CIDR range should you use?
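The arithmetic behind the Pods-per-node question can be sketched as follows. This is a minimal illustration, assuming GKE's documented sizing rule that each node receives a Pod range with at least twice as many addresses as its maximum Pods per node, rounded up to a power of two. The function name `pod_cidr_prefix` and the 8 to 256 bounds check are assumptions made for this example and are not part of any Google Cloud API.

```python
def pod_cidr_prefix(max_pods_per_node: int) -> int:
    """Return the per-node Pod CIDR prefix length for a given Pod maximum.

    Assumption: the node range must hold at least 2 * max_pods_per_node
    addresses, rounded up to the next power of two.
    """
    # Assumed valid range, taken from the GKE sizing table (8 to 256 Pods).
    if not 8 <= max_pods_per_node <= 256:
        raise ValueError("max_pods_per_node is expected to be between 8 and 256")

    needed_addresses = 2 * max_pods_per_node
    # Number of host bits required to cover needed_addresses.
    host_bits = (needed_addresses - 1).bit_length()
    return 32 - host_bits


if __name__ == "__main__":
    # The range must be sized for the maximum, not the minimum.
    print(f"/{pod_cidr_prefix(100)}")  # /24 (200 addresses needed, 256 available)
    print(f"/{pod_cidr_prefix(60)}")   # /25 (120 addresses needed, 128 available)
```

Under this assumption, the range has to be sized for the 100-Pod maximum rather than the 60-Pod minimum, since a /25 would cap each node at 64 Pods.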

You manage two VPCs: VPC1 and VPC2, each with resources spread across two regions. You connected the VPCs with HA VPN in both regions to ensure redundancy. You’ve observed that when one VPN gateway fails, workloads that are located within the same region but different VPCs lose communication with each other. After further debugging, you notice that VMs in VPC2 receive traffic but their replies never get to the VMs in VPC1. You need to quickly fix the issue. What should you do?

There are two established Partner Interconnect connections between your on-premises network and Google Cloud. The VPC that hosts the Partner Interconnect connections is named "vpc-a" and contains three VPC subnets across three regions, Compute Engine instances, and a GKE cluster. Your on-premises users would like to resolve records hosted in a Cloud DNS private zone following Google-recommended practices. You need to implement a solution that allows your on-premises users to resolve records that are hosted in Google Cloud. What should you do?

Your organization uses a Shared VPC architecture with a host project and three service projects. You have Compute Engine instances that reside in the service projects. You have critical workloads in your on-premises data center. You need to ensure that the Google Cloud instances can resolve on-premises hostnames via the Dedicated Interconnect you deployed to establish hybrid connectivity. What should you do?